Authors: Ab Bertholet, Nienke Nieveen & Birgit Pepin.

Abstract

Risk decisions by professionals in safety and security regularly appear to be more irrational and biased than effective and efficient. A sector where fallacies in risk decision making constitute a key issue for management is airport security. In this article we present the results of an exploratory mixed methods study addressing the question: How and to what extent can we train professionals, in order to help them make smarter risk decisions? The study was designed as a controlled experiment, preceded by qualitative preliminary phases with interviews and document analysis. The main conclusion is that the workshops we offered to airport security agents had a positive effect on the awareness and risk decisions of the intervention group. In addition to this awareness effect, the intervention group showed less biased self-evaluation and was able to identify individual, collective and organisational points for improvement. We note that these results should only be considered first indications of an effect. Because the experiment was embedded in the normal working day of the security agents, the context variables could not be entirely controlled. We tried to compensate for this as much as possible by combining quantitative and qualitative data.

1 Introduction

Risk management is a critical task in the fields of safety and security. It is the daily work of many thousands of professionals in healthcare and rehabilitation, child welfare, transport, industry, the police and fire services, events management, and the prevention of terrorism. Risk decisions aim to increase safety and security and to limit potential damage. For some professions risk management is core business, but more often it is a secondary responsibility. In daily practice, however, risk decisions made by professionals in various sectors regularly appear to be more irrational and biased than effective and efficient. In this article, we present a generic process model of biased risk decision making by professionals in safety and security management, derived from scientific literature and empirical practice. It provides generic insights into a general problem with human factors in risk management practice.

In this study we concentrate on the example of security checks at the international airport of Amsterdam, in the form of a randomised controlled experiment with risk competence training. The focus is on effective learning of risk decision-making skills. The central question of the experiment was: How and to what extent can we train professionals, in order to help them make smarter (i.e. more rational, effective and efficient) risk decisions? In the subsequent sections, we describe the preliminary study and the design of the training and the experiment, and we present the results of the study. Finally, we discuss the effect of the intervention.

2 Process Model of Biased Risk Decision Making

Over the past decades, numerous studies have provided evidence of mental obstacles and reflexes that hinder rational (risk) decision making. Although the issue is well known and support tools (e.g. protocols and checklists) are available, in daily practice all kinds of professionals make risk decisions that are unsatisfactory, i.e. partly or entirely ineffective or inefficient (Bertholet, 2016a, 2016b). The main sources of bias identified in the literature are insufficient numeracy and risk literacy. Increasing professionals’ theoretical knowledge by teaching reckoning and clear thinking is no conclusive remedy in professional practice, and cognitive biases cannot be cured on a cognitive level alone (Kahneman, 2011). Specific risk competence training is even more important (Gigerenzer, 2003, 2015).

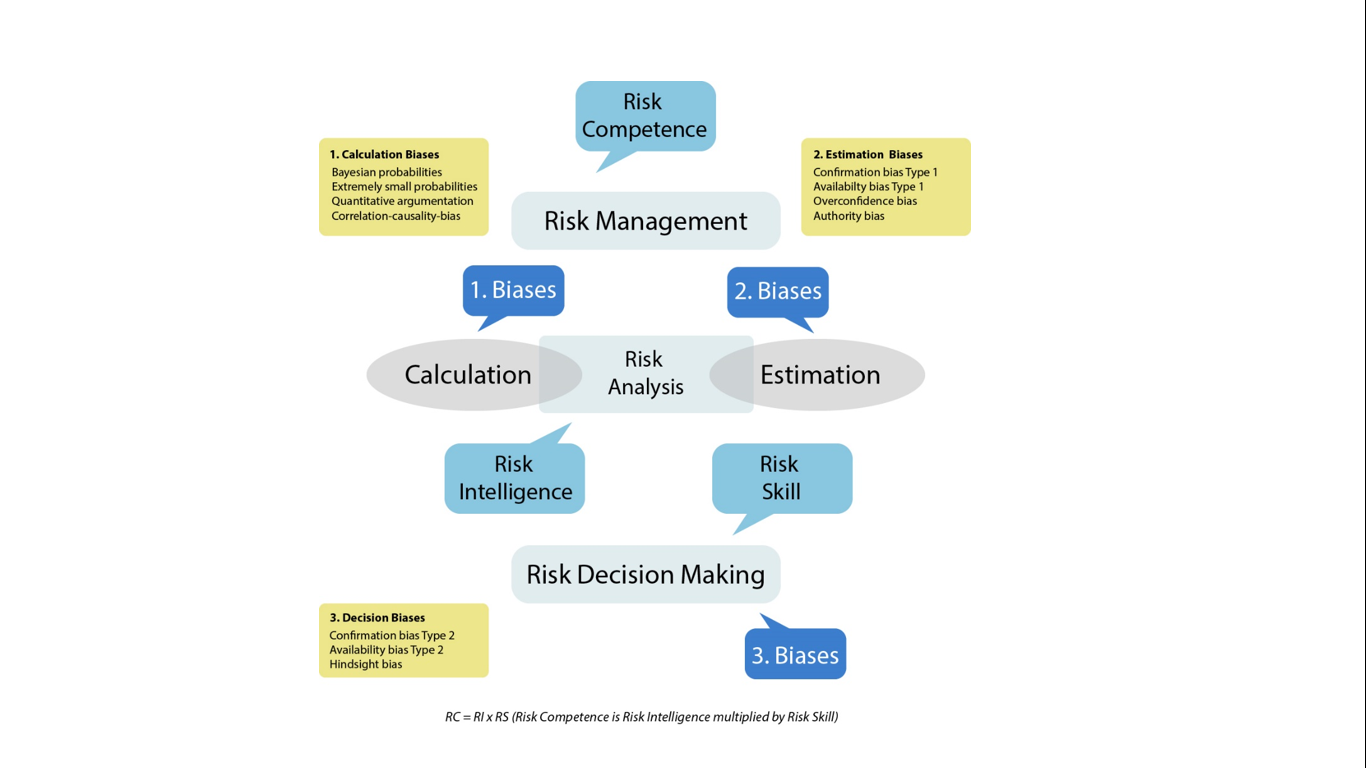

Our concept of risk competence, including a process model of biased risk decision making (Figure 1), is based on a theoretical and empirical literature review (Bertholet, 2016b).

From common risk management models, we focus on the two critical phases where fallacies lurk: 1. the analysis or judgement phase, and 2. the decision-making phase. In the safety and security domain, there are two main methods of risk analysis: calculation and estimation. Both methods can be distorted by mental patterns and fallacies in the human brain. We call the extent to which professionals are able to apply available methods and instruments of risk assessment risk intelligence (Evans, 2012). On the basis of their risk analysis, professional risk managers decide whether or not to implement a particular intervention. Biases also occur within the risk decision itself. Professionals who react effectively and efficiently are said to have a high level of risk skill.

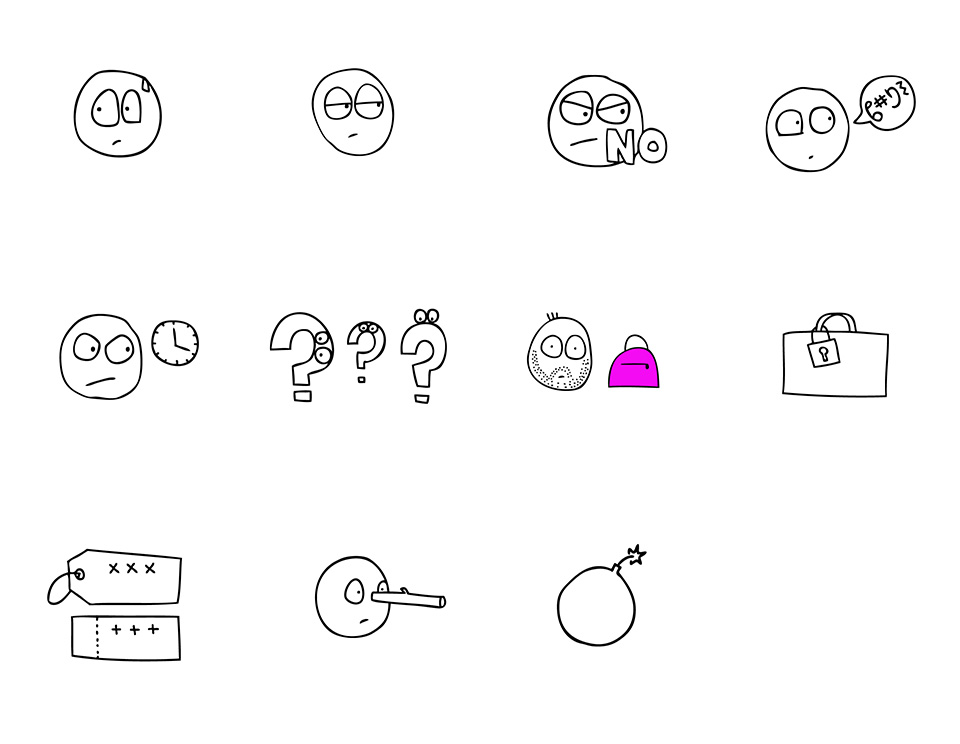

In the model, we consider Risk Intelligence (RI) as the indicator of risk analysis and Risk Skill (RS) as the indicator of risk decision making. We call the product of both indicators Risk Competence (RC), which is the overall indicator for risk management: RC = RI × RS (Evans, 2012; Gigerenzer, & Martignon, 2015; Bertholet, 2016b). Based on field interviews and the literature (Kahneman, 2011; Gigerenzer, 2003; Dobelli, 2011, 2012; Bertholet, 2016b, 2017), we selected eleven biases for our study that we regarded as most relevant for safety and security practice. To illustrate the process model, cartoons of all eleven biases were designed; they are presented in an animated 9-minute video: https://youtu.be/4rWPppdJ3YQ. The eleven biases are clustered into three groups.
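Before turning to the three groups, the multiplicative definition RC = RI × RS can be illustrated with a minimal sketch. The article does not specify scales for RI and RS; the sketch assumes, purely for illustration, that both are normalised scores between 0 and 1, and the function name `risk_competence` is hypothetical. It shows the main consequence of a product: a weakness in either phase caps overall competence.

```python
def risk_competence(ri: float, rs: float) -> float:
    """Risk Competence as the product RC = RI x RS.

    Assumption (not from the study): RI and RS are normalised scores in [0, 1].
    """
    for name, value in (("RI", ri), ("RS", rs)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must lie in [0, 1], got {value}")
    return ri * rs


# Strong analysis but weak decision making still yields modest competence.
print(risk_competence(0.9, 0.5))
# Without a sound analysis, even perfect decision skill gives zero competence.
print(risk_competence(0.0, 1.0))
```

With an additive model instead, a high score in one phase could mask a very low score in the other; the product does not allow that.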

Calculation biases

Numbers and percentages appear to express a risk in a quantitative and precise manner. In practice, however, professionals find it hard to grasp the precise meaning of chance, probability and other quantitative data. Paulos (1988), Kahneman and Tversky (1979), Gigerenzer (2003) and Kahneman (2011) have written for decades about ‘innumeracy’ caused by cognitive illusions. Conditional and extremely small probabilities, statistical argumentation and causality biases cause the biggest problems in ‘reckoning with risk’ (Gigerenzer, 2003).
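A classic illustration of how conditional probabilities mislead is a screening alarm, and Gigerenzer’s remedy is to restate the problem in natural frequencies (counts out of a concrete group). The sketch below computes the same quantity both ways. All inputs are hypothetical numbers chosen for illustration, not data from this study: 1 in 1,000 bags contains a prohibited item, the scanner flags 90% of those bags, and it also flags 5% of clean bags. Out of 100,000 bags that gives 90 true alarms against 4,995 false alarms, so only about 1.8% of alarms concern a real item, far lower than the 90% detection rate suggests.

```python
from fractions import Fraction


def posterior_bayes(base_rate, hit_rate, false_alarm_rate):
    """P(prohibited item | alarm) computed with Bayes' theorem."""
    p_alarm = base_rate * hit_rate + (1 - base_rate) * false_alarm_rate
    return base_rate * hit_rate / p_alarm


def posterior_natural_frequencies(bags, base_rate, hit_rate, false_alarm_rate):
    """The same quantity expressed as counts in a concrete number of bags."""
    with_item = bags * base_rate                          # bags that really contain an item
    true_alarms = with_item * hit_rate                    # of those, bags the scanner flags
    false_alarms = (bags - with_item) * false_alarm_rate  # clean bags flagged anyway
    return true_alarms, false_alarms, true_alarms / (true_alarms + false_alarms)


# Hypothetical inputs, chosen only for illustration (not data from the study).
base_rate = Fraction(1, 1000)       # 1 in 1,000 bags contains a prohibited item
hit_rate = Fraction(9, 10)          # the scanner flags 90% of those bags
false_alarm_rate = Fraction(1, 20)  # and 5% of clean bags

print(float(posterior_bayes(base_rate, hit_rate, false_alarm_rate)))
true_alarms, false_alarms, share = posterior_natural_frequencies(
    100_000, base_rate, hit_rate, false_alarm_rate)
print(true_alarms, false_alarms, float(share))
```

Exact fractions are used so that both routes give identical results; the point is that the count-based version is much easier for people to reason about.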

Estimation biases

Risks that cannot be calculated must be estimated. This is often the case with social safety, for example (Gigerenzer, 2003; Dobelli, 2011, 2012). In risk analysis, professionals often seek confirmation of risks they are already aware of. The danger is that in focusing on a single risk profile, they may miss the bigger picture. This is known as confirmation bias. Authority bias occurs when a professional who is higher in the hierarchy or more experienced is not corrected by colleagues, even when the colleagues’ analysis is better; it is assumed that the authority’s analysis is more accurate. The overconfidence effect is the mirror image of authority bias. Even experienced professionals can sometimes wrongly assume that they are correct. They may overvalue their own capacities or the probability of success of a project, and they may underestimate the risks involved. Availability bias in the analysis phase causes professionals to trust readily available information about risk rather than look for less visible data.

Decision biases

Because of biases, fallacies, thinking errors or distortions that appear in the analysis phase, optimal and rational judgements can no longer be made in the decision phase. Biases also lurk in the decision phase itself, when it comes to determining whether the risk analysis calls for an intervention and, if so, which one (Gigerenzer, 2003; Dobelli, 2011, 2012). At this point, a second type of confirmation bias can arise: when a professional’s assessments are endorsed, greater and more dangerous risks may be ignored. In the decision phase we also see a fallacy that we call availability bias of the second type. Professionals tend not to choose the best intervention, therapy or policy; they choose the remedy they already know, the one at the forefront of their mind. Hindsight bias is a fallacy exhibited by more than just professionals; it may affect public opinion, the media and politicians even more deeply. After the fact, it is easy to conclude that something else should have happened or that action should have been taken earlier.

3 Study with airport security agents: Context analysis

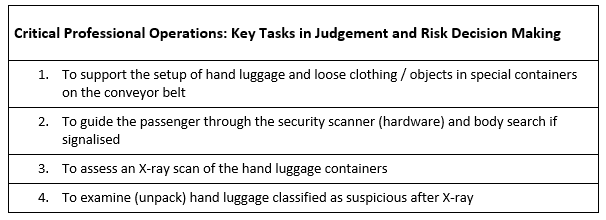

A sector where fallacies in risk analysis and decision making constitute a key issue for management is airport security. In the screening of passengers and hand luggage in civil aviation, security agents are deployed to prevent persons or objects that may endanger safety from getting on board aircraft. Agents make risk decisions about passengers and luggage items by classifying persons, behaviour, situations and objects as safe, suspicious or dangerous. Their critical operational tasks in risk decision making are displayed in Figure 5. The quality indicators of the airport security operation are measured by inspections and by sampling. Samples are dangerous or otherwise prohibited items carried by so-called ‘mystery guests’ on their body or in their hand luggage, which the security agents must then intercept.

Improving security agents’ performance in risk decision making could make a significant contribution to the security of the airport. Although avoiding fallacies receives attention during the agents’ initial training and afterwards in periodic training, the problem of fallacies in decision making often remains. Hence, human factors in airport security are considered a systematic, and thus predictable, weak link (Kahneman, & Tversky, 1979; Gigerenzer, 2003; Ariely, 2008; Kahneman, 2011; Dobelli, 2011, 2012). This is why we set up our study at the request of an airport security company.

First, we investigated the context and the target group of security professionals through observation, interviews and document analysis (e.g. manuals, working instructions, procedures, training materials). Based on the findings of the preliminary study, we translated the generic model (Figure 1) into an empirical model for the specific professional domain of the airport security agent by ranking the biases by relevance. We identified confirmation, availability, authority, overconfidence and hindsight bias as crucial fallacies. For security agents, the focus in the analysis phase was on estimation more than on calculation. Furthermore, particular preconditions and stress factors, such as time and peak pressure, apply to the decision-making process. What the critical professional tasks had in common was that they were carried out according to established procedures, while agents needed meta-level vigilance to watch simultaneously for specific indicators of deviant or suspicious behaviour (Figure 3). These included, for example, luggage that did not seem to fit the specific passenger, or a passenger who seemed extremely hurried or curious or otherwise behaved differently.

4 Training intervention design

This section describes the design of the training intervention, including its objectives, the underlying training concepts, and corresponding features. The intervention focused on two short-term goals, set jointly by the security company and the research team: (a) agents performing better at selected critical professional tasks and (b) defining norms for agents’ performance. The specific learning goals of the training intervention for the security agents were: (a) to acquire knowledge of common fallacies in assessing risks and making risk decisions; (b) to recognise and be aware of these errors in their own work and behaviour; (c) to explore opportunities to avoid or reduce fallacies in the context of their own work.

Conceptual dimensions

The design of the intervention was based on four concepts regarding vocational learning and learning in general: competence-based learning (Mulder, 2000), self-responsible learning (Schön, 1983; Zimmerman, 1989), collaborative learning (Vygotsky, 1997) and concrete learning (Hattie, 2009; De Bruyckere, Kirschner, & Hulshof, 2015).

Competence-based learning refers to the European Qualifications Framework for Lifelong Learning (European Commission, n.d.), where competences are regarded as a third qualification area, next to knowledge and skills. For risk decision making in general, and therefore also for security checks at airports, awareness of fallacies and cognitive biases is essential. Both competence-based learning and self-responsible learning require awareness of the biasing effect of human intuition and perception. The theoretical dimension of self-responsible learning focuses on self-regulation, the professionalising effect on the individual (Schön, 1983; Vygotsky, 1997). Professionals who want to improve their work performance must be able to reflect on their professionalism. ‘Reflective practitioners’ (Schön, 1983) can evaluate their own actions at a metacognitive level and have a picture of the path that leads them to become the professionals they would like to be. Self-constructs such as self-esteem and self-efficacy are important indicators in this context; we used them as measures of the professionals’ self-image (Bandura, 1977; Zimmerman, 1989; Judge, & Bono, 2001; Ryan, & Deci, 2009). Whereas scores on an assignment or a test can be considered an external measure of risk competence, professionals’ self-scores can serve as an internal mirror image. For that reason, we asked security agents to score their own self-esteem and self-efficacy (Table 2). Self-esteem refers to more general ratings of the professional’s performance level and stage of professional development. Self-efficacy is the belief in one’s own capacity to succeed at specific tasks (ibid.).

Collaborative learning in strong partnership with others offers opportunities to achieve better results compared to individual learning, as long as applicable design principles are respected (Valcke, 2010; Johnson, & Johnson, 2009; Hattie, 2009). The instructional principle of ‘concrete learning’ (Hattie, 2009; De Bruyckere, Kirschner, & Hulshof, 2015) claims a better learning achievement when working with realistic cases and training materials from practice, rather than with academic and theoretical exercises. This means that agents needed to recognise and acknowledge assignments and case studies as originating from and relevant to their daily work. For the dimensions of competence-based and collaborative learning, authentic rather than academic content might have had a positive effect mainly on the motivation of the agents (Ryan, & Deci, 2009). Specific choices in the intervention design (e.g. visualisation as an instructional strategy, group assignments as a metacognitive reflection strategy) were based on insights of concrete learning as well (Hattie, 2009; Gigerenzer, 2015; Kirschner, 2017).

A teaching strategy built on spaced learning, repetition and cyclical training of the right way of thinking and decision making can help ensure retention and consolidate learning achievement. Training in groups can also lead to more active and metacognitive processing (Hattie, 2009; De Bruyckere, Kirschner, & Hulshof, 2015; Johnson, & Johnson, 1999). We applied these principles in the instructional strategies and methods, as well as in the production of all the training materials (Figures 2, 3 and 4).

Content of the intervention

The training offered to an intervention group of about sixty agents consisted of two workshop sessions and an extensive four-week training period, in which participants responded to one risk decision every weekday. An intervention group and a control group both completed a pre-test and a post-test.
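The extensive training follows a simple spaced-practice rhythm: one item per weekday for four weeks, which yields the 20 items mentioned later. A minimal sketch of such a schedule is shown below. The start date is hypothetical (the article does not report the actual dates), the function name `weekday_schedule` is ours, and public holidays and shift patterns are deliberately ignored.

```python
from datetime import date, timedelta


def weekday_schedule(start: date, n_items: int = 20) -> list[date]:
    """Return the dates of the first n_items weekdays (Mon-Fri) on or after start.

    Assumption: public holidays and individual shift rosters are ignored.
    """
    days = []
    current = start
    while len(days) < n_items:
        if current.weekday() < 5:  # Monday=0 ... Friday=4
            days.append(current)
        current += timedelta(days=1)
    return days


# Hypothetical start date (a Monday); four working weeks give 20 items.
schedule = weekday_schedule(date(2024, 1, 8))
print(len(schedule), schedule[0], schedule[-1])
```

Sending items on fixed working days keeps the spacing regular; a real deployment would also need to account for agents who are off duty on a given day.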

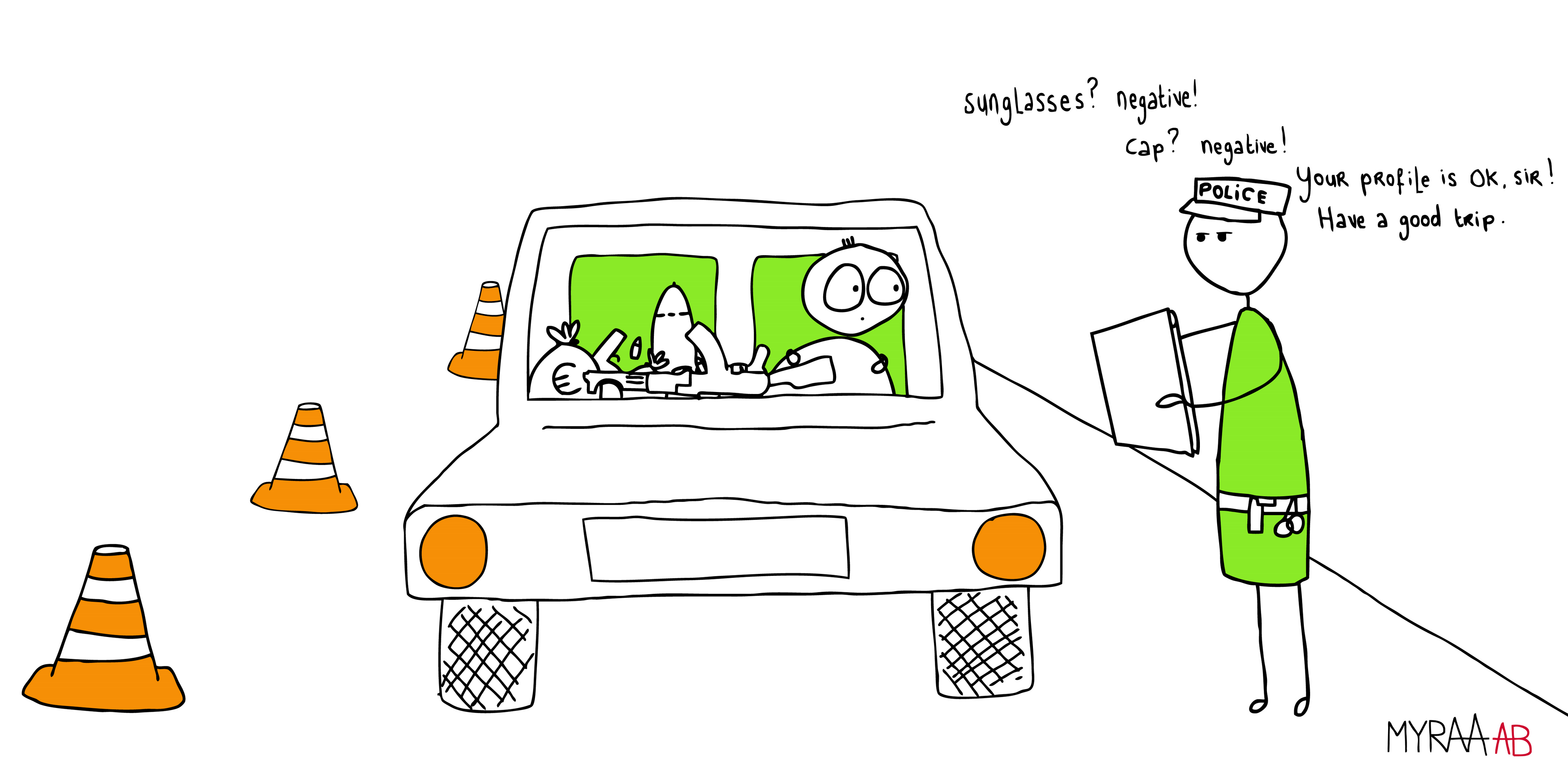

From the preliminary study, we compiled an inventory of problematic risk decisions as they occurred in the daily practice of the airport. Based on this inventory, we developed the training material: 50 test items built around mini-cases, each with one realistic risk decision from daily practice. In addition, the biases from the generic process model were visualised in cartoons (Figure 2) and the ‘suspicious indicators’ were transformed into pictograms (Figure 3). We thus replaced the traditional transfer of knowledge through written or spoken text with visual media containing the essential points (Figures 2, 3).

We translated the eleven cognitive biases from the generic process model (Figure 1) into cartoons, which depict the respective fallacies symbolically, by example and in an instantly recognisable manner, more directly than written text can. Figure 2 is an example of this (confirmation bias Type I). In risk analysis, safety and security professionals often look for confirmation of risks they are already aware of. The danger is that in focusing on a single risk profile they may miss the bigger picture. This is known as confirmation bias (Dobelli, 2011). It occurs in all kinds of profiling activities, from police surveillance to intelligence and security services. The cartoon does not present a theoretical concept; instead, the metaphor in the drawing should help professionals recognise the situation and apply it to their own practice. In the training session, we discussed the situation in the cartoon with the agents and asked them to apply it to their own daily practice: “What kind of risks in the bigger picture do my colleagues and I overlook, by focusing on common risks that are more likely to occur?”

We replaced the signs of specific suspicious behaviour or situations at the airport with icons, which should provide a mental shortcut to help the agents recognise these situations. A visual stimulus can lead to a risk decision response via a shorter route (Gigerenzer, 2015).

For the extensive training part with daily test items, we took photos of the security operation at the airport, along with the suspicious indicators: screenshots of the X-ray scanner and security scan, and photographs of hand luggage. For privacy reasons, we did not use any pictures of real passengers. Instead, students played the roles of passengers, and with photos from the public domain (Google) we were able to create a wide variety of ‘passengers’ as well as realistic test questions.

During the first workshop, conceptual knowledge was introduced in the form of cartoons of selected fallacies. No underlying theory was offered; instead, we drew attention to the fallacy embodied in each cartoon. The agents were then invited, in group assignments, to link the fallacies to their own practice and their own behaviour. The assignments focused on finding individual and collective answers to some key questions about awareness, responsibility, signalling, limiting conditions, professional reflection and teamwork. As a final group assignment in the second workshop, the agents were asked to optimise the airport security filter by redesigning it.

5 Design of the mixed methods study

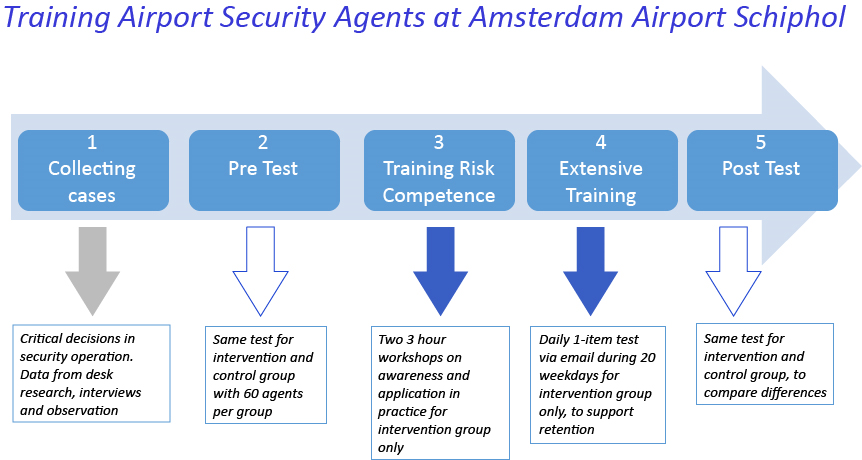

Figure 5 shows the five phases of the study, with preliminary study (1), pre- (2) and post-test (5), intensive (3) and extensive (4) training. The training interventions (3 and 4) were offered to the intervention group only, the tests (2 and 5) were performed by both the intervention and the control group. In our mixed methods study we also used triangulation, in order to obtain more balanced indications of the intervention effects (Creswell, 2013).

Interviews and context analysis

First, we carried out a preliminary study (1) in the form of interviews, document analysis and observation of the security operation at one of the departure terminals of Amsterdam Airport Schiphol. In twenty-one iterative, semi-structured interview sessions with employees and managers of the airport security company, sampling all operational and management levels, we collected facts and opinions on working procedures, performance indicators, judgement and decision making, and workplace optimisation. The interviews (30–60 minutes each) were recorded and analysed with respect to selected topics: (reported) key factors for success and critical competences; professional attitude; specific tasks, judgements and decisions; and personal and team biases. Using these key topics, in combination with information on working routines and procedures from document analysis (e.g. manuals and in-company training materials), we selected the biases most likely to occur at the airport security check. We used this selection both for creating test items and for creating workshop materials. We also studied the procedures and work instructions, the key performance indicators and the service level agreement between the airport and the security company. At the end of the preliminary study, we identified four professional operations, confirmed by the respondents as critical tasks, that cover the complete process of judgement and risk decision making by the security agents (Figure 5).

Randomised controlled experiment

The experiment (Figure 4) was designed as a randomised controlled trial with intervention and control groups and pre- and post-tests. For this study we drew a random sample of 10 percent of all security agents of the security company (n=120). We randomly assigned this sample of 120 employees to an intervention group and a control group (n=60/60). Prior to the training intervention, we developed two similar tests, used as pre- and post-test, each with nine specific (part 1) and six general risk decision items (part 2). Part 3 of the tests consisted of questions on self-constructs (self-esteem and self-efficacy) and background variables. The intervention group (IG) and the control group (CG) both took the pre- and post-test. Only the intervention group was offered intensive and extensive training between the two tests; the control group did not receive any specific treatment (Figure 5). The intensive first part of the training intervention was offered to the IG agents in groups of 10 to 15 people, in a standard training room at the airport. The agents took part in two workshops of three hours each, less than two weeks apart. All workshops were led by the same two trainers from Utrecht University of Applied Sciences. After the workshops, we offered an extensive training to the IG agents: they received one test item per day via email, on weekdays, for a period of four weeks. Test items were similar to the nine specific risk decisions of the pre- and post-tests. In total, the IG agents were asked to respond to 20 email items. We collected qualitative data from the interviews (Figure 4, step 1) and from the workshop sessions (Figure 4, step 3). During the sessions, the members of the intervention group shared their professional opinions orally and in writing, both individually and in small groups.
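The sampling and allocation steps described above can be sketched as follows. This is an illustration under stated assumptions, not the procedure the authors used: the roster of 1,200 agents is hypothetical (chosen so that a 10% sample gives n = 120), the agent identifiers and the function name `draw_and_allocate` are invented, and a fixed seed is used only to make the allocation reproducible. Python’s `random.Random.sample` returns the chosen items in selection order, so any slice of the sample is itself a random sample; splitting it in half therefore gives a random allocation into two groups of 60.

```python
import random


def draw_and_allocate(roster, fraction=0.10, seed=None):
    """Draw a simple random sample of the roster and split it into two groups.

    random.Random.sample returns items in selection order, so each half of
    the sample is itself a random subset: cutting it in two randomises
    allocation to intervention and control.
    """
    rng = random.Random(seed)
    k = round(len(roster) * fraction)
    sample = rng.sample(roster, k)
    half = k // 2
    return sample[:half], sample[half:]


# Hypothetical roster of 1,200 agents, so that a 10% sample gives n = 120.
roster = [f"agent-{i:04d}" for i in range(1, 1201)]
intervention, control = draw_and_allocate(roster, seed=42)
print(len(intervention), len(control))
```

Recording the seed makes the allocation auditable, which helps when attrition later has to be compared between the groups.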

6 Findings

In this section, we present the quantitative and qualitative results of the experiment.

Quantitative results

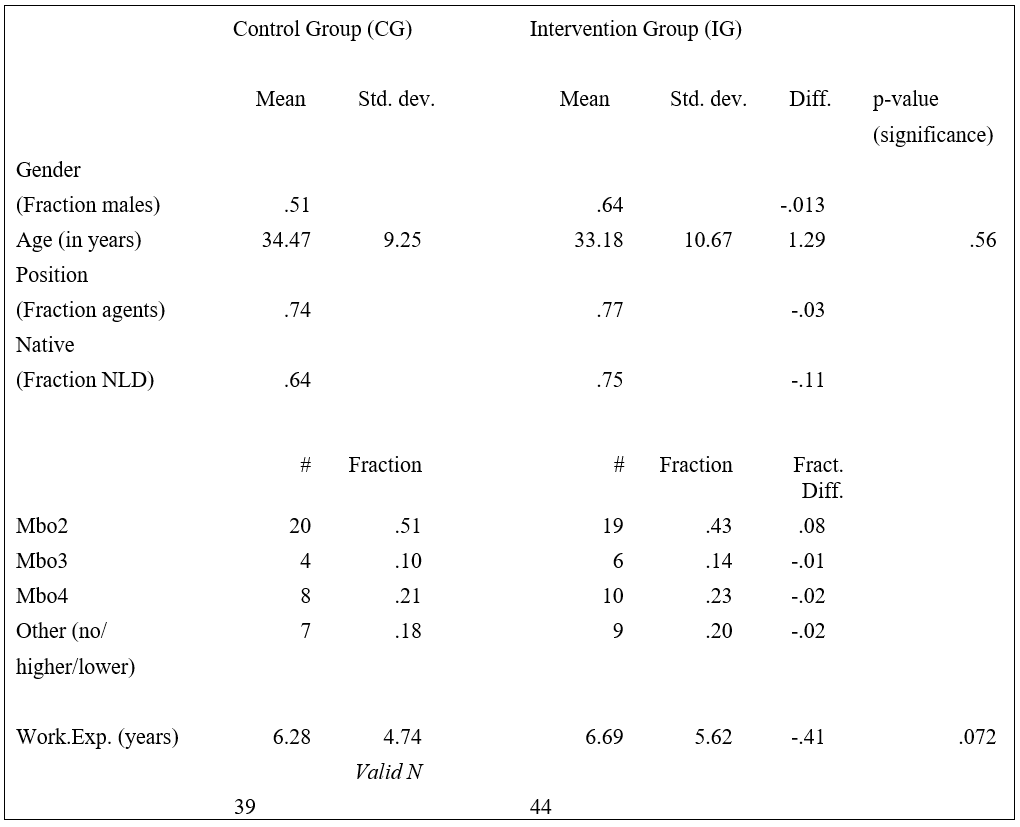

Table 1 shows descriptive statistics of the research groups, as well as the results of a number of independent t-tests on differences between the control group and the intervention group. The (categories of) variables are:

- Background characteristics: gender (fraction of males), age (in years), function (fraction agents vs team leaders), country of birth (fraction Netherlands – NLD vs other);

- Education and work experience: education level (ascending levels of Dutch intermediate vocational education; fraction mbo2, mbo3, mbo4 vs others) and work experience (in years).

There were no significant differences between the two research groups, an expected result of randomised allocation of participants to the intervention and control groups. The intervention group contained more men (64% vs 51%) and more native Dutch agents (i.e. born in the Netherlands: 75% vs 64%). Differences in average age (≈ 34 years) and position (≈ 75% agents vs ≈ 25% team leaders) were small. Professionals in the intervention group were more highly educated and more experienced (+.41 years on an average of ≈ 6.5 years of experience).
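The group comparisons in Table 1 rely on independent t-tests. The article does not state which variant was used, so the sketch below shows one common choice, Welch’s t-test, which does not assume equal variances; the function name and the small data lists are hypothetical and serve only to show the computation. It returns the t statistic and the Welch–Satterthwaite degrees of freedom. A p-value additionally requires the t distribution, for example via SciPy’s `scipy.stats.ttest_ind(a, b, equal_var=False)`.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom
    for the difference in means between two independent samples."""
    na, nb = len(a), len(b)
    se2_a = variance(a) / na  # squared standard error of mean(a)
    se2_b = variance(b) / nb  # squared standard error of mean(b)
    t = (mean(a) - mean(b)) / sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (na - 1) + se2_b ** 2 / (nb - 1))
    return t, df


# Hypothetical years of work experience, not data from the study.
ig_experience = [1, 2, 3, 4, 5]
cg_experience = [2, 3, 4, 5, 6]
t, df = welch_t(ig_experience, cg_experience)
print(t, df)
```

The same function applies to the binary background variables (e.g. coded 1 for male, 0 otherwise), where the group means are the reported fractions.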

Table 1. Comparison of Intervention and Control Group based on Post-test Results.

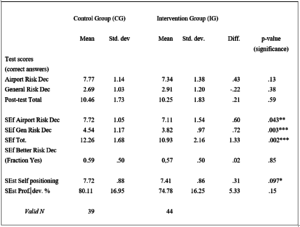

Table 2 compares the two groups on the post-test:

- Scores on the post-test: average number of correct answers to the specific risk decisions (airport security, 9 items) and general risk decisions (6 items), and the total of both categories (15 items).

- SEf is the indicator of professionals’ self-efficacy in the three categories (specific and general test items, and the total of both). Respondents estimated their own competence after completing the post-test. The fourth indicator of self-efficacy we used was a yes/no response to the question whether the agent felt she was making better risk decisions than she did two months earlier during the pre-test.

- Two indicators of self-esteem (SEst): self-positioning compared with colleagues (scale from low 1 to high 10), self-assessment on level of professional development (% 0–100).

Post-test scores on the risk decisions test did not show any significant differences between the research groups. Control group members had a higher score on specific airport risk decisions and on the total of the post-test, whereas intervention group members scored higher on general risk decisions. All agents had a higher percentage of correct answers to the specific airport risk decisions (≈ 7.5 of 9 = 83%) than to general risk decisions (≈ 2.8 of 6 = 47%). Significant differences did occur in the self-construct scores. In both groups, self-efficacy scores substantially overestimated the test scores on general risk decisions; the overestimation by control group members was almost twice as high as that of intervention group members. Self-efficacy on specific airport risk decisions was almost accurate in both groups. The self-esteem scores (position compared with colleagues on a 1–10 scale, and stage of professional development on a 0–100% scale) were higher in the control group. Intervention group members were about 15 months younger, more highly educated, more experienced (all in Table 1) and had a more modest self-esteem (Table 2).

Table 2. Comparison of Intervention and Control Group based on Post-test Results.

Significance at the 10%, 5% and 1% levels (*p<.10, **p<.05, ***p<.01)

Extensive training 1-item test

After the workshop sessions, the intervention group (valid n = 35) underwent four weeks of extensive training, with a daily 1-item test on weekdays. In that period, each participant received twenty questions in total (Figure 4). Of the 700 items deployed in this way, we received 527 replies, of which 381 were correct (72%). On average, every agent answered 15 of the 20 questions, of which 11 (73%) were correct. That score was somewhat higher than the average of 10 correct answers (68%) that the intervention group gave on the post-test. The 1-item tests consisted only of specific airport risk decisions.
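The figures in the paragraph above can be checked with a few lines of arithmetic. All inputs are the counts reported in the text (and the intervention group’s post-test mean of 10.25 on 15 items from Table 3); the variable names are ours. The check also makes explicit a quantity the text does not state, the response rate, and shows that the 73% figure comes from the rounded per-agent averages (11 of 15), whereas the pooled accuracy is 72%.

```python
# Figures reported for the extensive 1-item training (intervention group).
valid_n = 35          # agents with valid data
items_per_agent = 20  # one item per weekday for four weeks
replies = 527         # items answered
correct = 381         # correct answers among the replies

deployed = valid_n * items_per_agent   # items sent out
response_rate = replies / deployed     # share of items answered
accuracy = correct / replies           # share of replies that were correct
replies_per_agent = replies / valid_n  # average items answered per agent
correct_per_agent = correct / valid_n  # average correct answers per agent

# Post-test comparison: mean IG total of 10.25 on 15 items (Table 3).
post_test_accuracy = 10.25 / 15

print(deployed, f"{response_rate:.0%}", f"{accuracy:.0%}")
print(f"{replies_per_agent:.1f} replies, {correct_per_agent:.1f} correct per agent")
print(f"post-test accuracy {post_test_accuracy:.0%}")
```
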

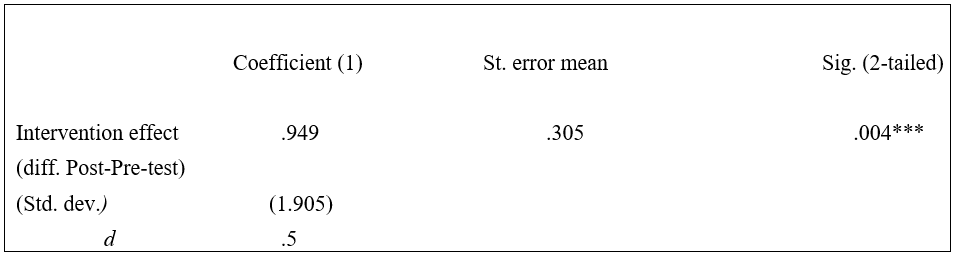

Table 3. Effect of Intervention ‘Security Performance’ on Intervention Group.

Significance at the 10%, 5% and 1% levels (*p<.10, **p<.05, ***p<.01)

Table 3 shows the effect of the intervention on the intervention group: there was a significant difference (.949) between the total test scores of the post-test (10.25) and the pre-test (9.30). The effect size, expressed as Cohen’s d, is .5. This measure indicates to what extent the training intervention has ‘made a difference’, by comparing the standardised means of the pre-test and post-test of the intervention group. By Cohen’s conventional benchmarks (.2 small, .5 medium, .8 large), an effect size of .5 corresponds to a medium effect. According to Hattie (2009), .5 can be considered a medium to high effect, for educational interventions in particular. Because there were too many missing values in the pre-test data of the control group, a valid difference-in-differences analysis [(post-test minus pre-test of IG = .949) – (post-test minus pre-test of CG)] was not possible.
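The two quantities discussed above can be sketched as follows. The article does not report the standard deviations or which formula for d was used, so the sketch assumes one common convention for a pre/post comparison: the mean change divided by the square root of the average of the pre- and post-test variances. Other conventions, such as dividing by the standard deviation of the paired differences, give different values. The difference-in-differences estimator subtracts the control group’s mean change from the intervention group’s; it cannot be computed without usable control-group pre-test data, which was the limitation in this study. Function names and score lists are hypothetical. For reference, the reported intervention group means give a change of 10.25 − 9.30 ≈ .95.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d_prepost(pre, post):
    """Standardised mean change from pre-test to post-test.

    Assumption: the mean change is divided by the root mean of the pre- and
    post-test variances; other conventions (e.g. the SD of the paired
    differences) give different values.
    """
    sd = sqrt((variance(pre) + variance(post)) / 2)
    return (mean(post) - mean(pre)) / sd


def difference_in_differences(ig_pre, ig_post, cg_pre, cg_post):
    """(IG post - IG pre) - (CG post - CG pre), computed on group means.

    Requires usable pre-test data for the control group.
    """
    return (mean(ig_post) - mean(ig_pre)) - (mean(cg_post) - mean(cg_pre))


# Hypothetical scores, not the study's data.
pre = [8, 9, 10, 11, 12]
post = [9, 10, 11, 12, 13]
print(round(cohens_d_prepost(pre, post), 3))
print(difference_in_differences([1, 2], [3, 4], [1, 2], [2, 3]))
```

Without the control-group term, the pre/post change in the intervention group alone cannot separate the training effect from test familiarity or other changes over the same period.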

Finally, the valid N in this study is smaller than expected. This was partly caused by operational issues: some scheduled agents could not participate in the workshop sessions, for various reasons. No-shows were also an issue in the pre- and post-tests, as they were in the extensive 1-item training. The operational planning of the security company is a very complicated process. Furthermore, the energy level and motivation of agents varied, depending on whether they attended the 3-hour training at the start of a working day or at the end of a night shift. As far as the quantitative part is concerned, we conclude that the statistical evidence may be weaker than intended in a randomised controlled trial design. The fact that we conducted our study within a running airport operation is certainly the main reason for this.

Qualitative analysis

In the workshop sessions, we collected information on how agents reflect on their daily tasks, what problems they experienced with risk decision making and what kind of solutions they would suggest. Agents wrote their opinions and solutions on big sheets of paper, which were collected and analysed afterwards. In Tables 4–7 we summarise the most important topics and most frequent answers. Table 4 shows the most common answers to the question what agents do to be and stay sharp at their job, and what they need from others.

Table 4. How to stay sharp at the job?

| What did the agents do to stay sharp? | What did agents say they need from others? |
|---|---|
| Do not be distracted and think for yourself | You must be able to count on colleagues, with support, collegiality and involvement; both co-workers and executives |
| Take responsibility for a healthy lifestyle (rest, sleep, nutrition etc.) | Positive and also constructive feedback, given in a sympathetic way |
| Be honest with yourself and colleagues, if you actually know that you are not sharp and fit with circumstances | Good briefings and good planning / team layout / compliance with times / rest periods |
| Incorporate recreation moments and humour | Pleasant working environment |

Reflection on professionals’ responsibilities at work indicates what they feel responsible for. Table 5 summarises individual and collective input from the workshops.

Table 5. Feeling responsible at the job.

| What did agents feel responsible for? | What did agents not feel responsible for? |
|---|---|
| Safety of colleagues and passengers | Passenger flow |
| Intercepting prohibited items | Operation of equipment |
| For yourself: commitment, motivation, quality of your work, arriving on time, staying respectful etc. | Failure and mistakes of others outside the team |

Table 6 shows what fallacies frequently occurred in daily practice, according to the participants of the workshops.

Table 6. Fallacies in daily practice.

| What mistakes did the agents recognise in themselves and/or in their colleagues? | What typical examples did they share? |
|---|---|
| Focus on one item or subject (confirmation bias) | Focusing on a bottle with liquid from the X-ray scan; missing other suspicious contents of a bag |
| Making all types of assumptions (availability bias) | Colleagues from the security company do not need to be checked |
| Long-term work experience that can lead to automatism / routine leading to incorrect assessment / risk decision (overconfidence bias, authority bias) | Knowing for sure that families from a certain country are no risk at all |
| Being influenced by available information in the news and social media (availability bias) | After an attack abroad, focusing too much on the modus operandi of the perpetrators of that incident |
| Blaming others for missing a sample test, particularly by team leaders and executives (hindsight bias) | Commenting on a colleague missing a test sample at the X-ray scan: “How could you miss it? This is obvious to everyone!” |

Table 7 shows what the professionals think actors at three levels (individual, team, company) could do to prevent fallacies and biased risk decision making.

Table 7. Prevention of fallacies.

| What could agents do themselves? | What could the team do? | What could the company do? |
|---|---|---|
| Take time to step back, assess the luggage / situation and see the complete picture | Give feedback, motivate and coach each other | Good communication and information |
| In case of doubt: check (again) | Talk to each other and give feedback, both positive and critical | Provide clear working procedures and refresh them (keep them alive) |
| Accept help, and ask for it if you are not sure | Actively point out risks and possible consequences to each other | Ensure good briefings and agreements on how information reaches everyone |
| Stay alert, curious and (self-)critical | Keep training and motivating each other, and keep remembering and refreshing good practice as a team | |
| Keep your background information up to date (on attacks, for example) | Give a colleague a break (after he or she has missed a test sample, for example) | |
| Communicate clearly (with passengers, with colleagues) | | |

7 Conclusion

The study aimed to answer the question: How and to what extent can we train professionals, in order to help them make smarter (i.e. more rational, effective and efficient) risk decisions? The main short-term goal of the intervention was to achieve a higher level of risk competence. The quantitative results showed a positive effect of the training intervention on the intervention group (d = .5), which can be considered a medium to high effect for an educational intervention (Hattie, 2009). This indicates that the complete intervention (workshops and tests) contributed to greater awareness in the process of judgement and decision making, and to improved risk decisions by the security agents. The self-esteem and self-efficacy scores indicate that agents in the intervention group made a more moderate, less biased self-evaluation. From the qualitative results, we conclude that agents were able to identify individual, collective and organisational points for improvement.
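The effect size of .5 is a standardised mean difference (Cohen’s d): the difference between two means divided by a pooled standard deviation. The Python sketch below shows only that arithmetic, together with Cohen’s conventional benchmarks (0.2 small, 0.5 medium, 0.8 large) and the 0.40 “hinge point” that Hattie (2009) uses as the average effect of educational influences. It is an illustration under stated assumptions: the text does not say which variant of d was computed (pooled SD of pre- and post-test, as assumed here, or, for instance, the SD of individual pre/post differences), the function names are hypothetical, and the input numbers are invented rather than taken from Tables 2 or 3.

```python
import math


def pooled_sd(sd_a: float, n_a: int, sd_b: float, n_b: int) -> float:
    """Pooled standard deviation of two samples, weighting each variance by n - 1."""
    weighted = (n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2
    return math.sqrt(weighted / (n_a + n_b - 2))


def cohens_d(mean_pre: float, sd_pre: float, n_pre: int,
             mean_post: float, sd_post: float, n_post: int) -> float:
    """Standardised mean difference: (post mean - pre mean) / pooled SD.

    Assumption: pre- and post-test are treated as two samples; other
    variants of d exist for paired designs.
    """
    return (mean_post - mean_pre) / pooled_sd(sd_pre, n_pre, sd_post, n_post)


def interpret(d: float) -> str:
    """Label |d| with Cohen's conventions and compare d with Hattie's 0.40 hinge point."""
    magnitude = abs(d)
    if magnitude >= 0.8:
        size = "large"
    elif magnitude >= 0.5:
        size = "medium"
    elif magnitude >= 0.2:
        size = "small"
    else:
        size = "negligible"
    relation = "above" if d >= 0.40 else "below"
    return f"{size} (Cohen); {relation} Hattie's 0.40 hinge point"


if __name__ == "__main__":
    # Invented example: pre-test mean 10, post-test mean 12, both SD 4, n = 30 each.
    d = cohens_d(10.0, 4.0, 30, 12.0, 4.0, 30)
    print(round(d, 2), interpret(d))
```

With equal standard deviations the pooled SD equals that common value, so a two-point gain on an SD of 4 gives d = 0.5, which sits at Cohen’s “medium” threshold and above Hattie’s hinge point.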

The intervention had a positive effect on the intervention group (Table 3; d = .5), although this effect could not be isolated by a difference-in-differences analysis. Even though there was no significant difference between the intervention and control groups, the post-test scores on general and specific test items (Table 2; ≈2.8 and ≈7.5) can be used by the security company as an indication of an agent’s average risk competence. The tests may provide anchors for setting standard values, for example for use in the future recruitment and selection of new agents. However, these values still need to be validated by replication.
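A difference-in-differences estimate subtracts the control group’s pre-to-post change from the intervention group’s, so that change which would have occurred anyway (for example through familiarity with the test) is not credited to the training. The Python sketch below computes only that point estimate from group means. The class and function names and all scores are hypothetical; judging whether such an estimate is significant would additionally require the individual scores (for instance a regression with a group × period interaction term), which is not shown here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GroupMeans:
    """Mean test score of one group at pre-test and post-test (hypothetical structure)."""
    pre: float
    post: float

    @property
    def change(self) -> float:
        # Pre-to-post change within this group.
        return self.post - self.pre


def difference_in_differences(intervention: GroupMeans, control: GroupMeans) -> float:
    """Change in the intervention group minus the change the control group showed anyway."""
    return intervention.change - control.change


if __name__ == "__main__":
    # Invented numbers: both groups improve, the intervention group somewhat more.
    trained = GroupMeans(pre=50.0, post=60.0)
    untrained = GroupMeans(pre=52.0, post=57.0)
    print(difference_in_differences(trained, untrained))  # 10.0 - 5.0 = 5.0
```

If both groups improve by the same amount, the estimate is zero even though the intervention group’s own pre/post comparison shows a gain; this is one way in which a within-group effect can fail to survive a difference-in-differences comparison.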

The results give grounds to expect that training such as this can be productive in various ways. Awareness can be considered the first step towards better performance, and it may be assumed that awareness improved, as reflected both in the test scores and in the self-esteem and self-efficacy scores. Even though the intervention group’s self-efficacy and self-esteem scores appear to have decreased after the intervention, this could mean that the agents had become more conscious of their limited competence regarding biases.

The importance of good workplace conditions and encouraging leadership came up in all workshops, both in individual contributions and in group discussions. This is an important perspective for the mid- and long-term success of a risk competence program. Whereas the process model (Figure 1) proved adequate for evaluating the risk management process itself, factors of organisational and managerial culture should be considered more broadly, since they may determine the circumstances under which judgement and risk decision making take place.

8 Discussion

Kahneman is not very optimistic about the possibility of improving people’s risk decision making competence. Gigerenzer, on the other hand, is convinced that educational strategies (such as visualisation and heuristics) can lead to better risk competence (Bond, 2009; Kahneman, 2011; Gigerenzer, 2015).

In this study, the focus was on the design and testing of a training intervention for a specific professional setting. The scale of the experiment may be increased later, possibly in a modified setting. The quantitative results were modest, and the effectiveness of the training is not easy to demonstrate in terms of statistical evidence and validity. Effectiveness may also have been affected by the intervention’s dependence on the operational planning of the security company and the airport. Sickness absence caused complications, as did changes in participants’ positions, functions or shifts. In addition, there may have been variables outside our model and design that were therefore not taken into account. As with many educational interventions, a Hawthorne effect could have occurred: participants in an experiment behave differently because they know they are part of an experiment (De Bruyckere, Kirschner and Hulshof, 2015). In this study, that could apply to the control group as well as to the intervention group. Furthermore, during the workshops we noticed that not all agents felt free to give their true opinion. Whether or not their concern was justified, some agents feared that management would take their contributions to the sessions into account in one way or another.

A risk competence program would benefit from improved internal communication. This starts with announcing the program and its background, and ends with communicating its achievements. Many agents did not know what training they were being sent to, which affected their attitude at the start of the training intervention. Explaining the rationale and meaning of a training intervention to participants before it starts is likely to make a big difference. We also recommend sharing the results of the experiment with the participants and the works council.

Cartoons and illustrations by MYRAAAB: Myra Beckers (myraaa.com) and Ab Bertholet.

Authors

Ab Bertholet, M.Sc., Lecturer, Researcher, Utrecht University of Applied Sciences (HU); Eindhoven University of Technology (TU/e), The Netherlands

Nienke Nieveen, PhD, Associate Professor, Netherlands Institute for Curriculum Development (SLO); Eindhoven University of Technology (TU/e), The Netherlands

Birgit Pepin, PhD, Professor of Mathematics/STEM Education, Eindhoven University of Technology (TU/e), The Netherlands

References

Ariely, D. (2008). Predictably Irrational: The Hidden Forces That Shape Our Decisions. New York: HarperCollins.

Bandura, A. (1997). Self-efficacy: The exercise of control. New York: W.H. Freeman.

Bertholet, A.G.E.M. (2016a). Exploring Biased Risk Decisions and (Re)searching for an Educational Remedy. EAPRIL Conference Proceedings 2015, 488–499.

Bertholet, A.G.E.M. (2016b). Risico-intelligentie en risicovaardigheid van professionals in het veiligheidsdomein. Problemen bij het nemen van risicobeslissingen door denkfouten en beperkte gecijferdheid. Ruimtelijke Veiligheid en Risicobeleid, 7(22), 60–74. [Dutch]

Bertholet, A.G.E.M. (2017). Risk Management and Biased Risk Decision Making by Professionals. Educational video: https://youtu.be/4rWPppdJ3YQ.

Bond, M. (2009). Risk School. Nature, 461 (29), 1189–1191.

Bruyckere, P. de, Kirschner, P.A., & Hulshof, C. (2015). Urban Myths about Learning and Education. London: Academic Press.

Creswell, J.W. (2013). Research design: Qualitative, quantitative, and mixed methods approaches. Thousand Oaks, CA: Sage Publications.

Dobelli, R. (2011). Die Kunst des klaren Denkens. 52 Denkfehler, die Sie besser anderen überlassen. München: Carl Hanser Verlag. [German]

Dobelli, R. (2012). Die Kunst des klugen Handelns. 52 Irrwege, die Sie besser anderen überlassen. München: Carl Hanser Verlag. [German]

European Commission (n.d.). The European Qualifications Framework for Lifelong Learning. Brussels: European Commission.

Evans, D. (2012). Risk Intelligence: How to Live with Uncertainty. New York: Free Press.

Gigerenzer, G. (2003). Reckoning with Risk: Learning to Live with Uncertainty. London: Penguin Books Ltd.

Gigerenzer, G. (2015). Risk Savvy: How to Make Good Decisions. London: Penguin Publishing Group.

Gigerenzer, G., & Martignon, L. (2015). Risikokompetenz in der Schule lernen. Lernen und Lernstörungen, 4(2), 91–98. [German]

Hattie, J.A.C. (2009). Visible Learning: A synthesis of over 800 meta-analyses relating to achievement. New York: Routledge.

Johnson, D.W., & Johnson, R.T. (1999). Making cooperative learning work. Theory into Practice, 38(2), 67–73.

Johnson, D.W., & Johnson, R.T. (2009). An Educational Psychology Success Story: Social Interdependence Theory and Cooperative Learning. Educational Researcher, 38(5), 365–379.

Judge, T.A., & Bono, J.E. (2001). Relationship of Core Self-Evaluations Traits (Self-Esteem, Generalized Self-Efficacy, Locus of Control, and Emotional Stability) With Job Satisfaction and Job Performance: A Meta-Analysis. Journal of Applied Psychology, 86(1), 80–92.

Kahneman, D., & Tversky, A. (1979). Prospect Theory: An Analysis of Decision under Risk. Econometrica, 47(2), 263–291.

Kahneman, D. (2011). Thinking, Fast and Slow. New York: Farrar, Straus and Giroux.

Mulder, P. (2000). Competentieontwikkeling in bedrijf en onderwijs; achtergronden en verantwoording. Wageningen: Wageningen University. [Dutch]

Paulos, J.A. (1988). Innumeracy: Mathematical Illiteracy and its Consequences. New York: Hill & Wang.

Ryan, R.M., & Deci, E.L. (2009). Promoting Self-Determined School Engagement: Motivation, Learning, and Well-Being. In K.R. Wentzel & A. Wigfield (Eds.), Handbook of Motivation at School (pp. 171–195). New York: Routledge, Taylor & Francis Group.

Schön, D. (1983). The Reflective Practitioner: How Professionals Think in Action. New York: Basic Books.

Valcke, M. (2010). Onderwijskunde als ontwerpwetenschap. Gent: Academia Press. [Dutch]

Vygotsky, L.S. (1997). The Collected Works of L.S. Vygotsky, Vol. 4: The History of the Development of Higher Mental Functions. New York: Springer US.

Zimmerman, B.J. (1989). Models of self-regulated learning and academic achievement. In B.J. Zimmerman & D.H. Schunk (Eds.), Self-regulated learning and academic achievement: Theory, research, and practice (pp. 1–25). New York: Springer.